Introduction

Python is the undisputed champion of machine learning, and for good reason. Its simple syntax allows people from various professions to quickly master it for their specific use cases. As a scripting language, Python facilitates rapid iteration of code blocks in Jupyter Notebooks. Although it’s slow, Python boasts an array of powerful libraries that leverage C and C++ for heavy computation behind the scenes.

So, why consider C++ for building and training artificial neural networks when Python is such a natural choice? In some scenarios, performance and portability requirements render the Python interpreter impractical. For instance, Python is not well-suited for low-latency, high-performance, or multithreaded environments, such as video games or production servers. Additionally, deploying AI on edge devices is challenging because deep learning models demand a lot of memory and compute, while edge devices have little of either to spare. A robot powered by reinforcement learning may be able to handle the computational demands of its models, but mobile robots often face limitations such as restricted battery power and the need to minimize computation. While C++ does not entirely solve these issues, it does help mitigate them.

Installation

We will be using LibTorch for this project. It is a C++ frontend that uses the same engine as PyTorch does for Python. This is great for those who know PyTorch, as things look very similar between the two. Please be aware that this is not a tutorial on neural networks in general, only on how to train them in pure C++.

The first thing we need to do is set up our project and make sure we have all the libraries we need. I will be doing everything on my M1 MacBook Pro, so some steps will differ on other machines. I start by creating a new project directory containing my main.cpp file, my lib directory, my CMakeLists.txt, and my build directory.

```bash
mkdir torch-cpp
cd torch-cpp
touch main.cpp
mkdir lib
touch CMakeLists.txt
mkdir build
```

Now we need to get the LibTorch C++ library into our project. Go to the LibTorch download page and pick the options that match your machine (be sure you are picking LibTorch and C++/Java). If you have a device that supports CUDA, be sure to pick that option for faster training. Download using the provided link and store the download in your lib directory within the project, unzipped so that the library sits at lib/libtorch (the path the CMake file below expects).

Next we need to set up our CMakeLists.txt. If you are not familiar with CMake, it is a popular build system for C++ that will help us easily pull the LibTorch library into our project. You can find out more, including how to install CMake on your machine, here. This is what my CMakeLists.txt file ended up looking like:

```cmake
cmake_minimum_required(VERSION 3.18 FATAL_ERROR)
project(main)

set(CMAKE_OSX_ARCHITECTURES "arm64")

find_package(Torch REQUIRED PATHS ${CMAKE_CURRENT_SOURCE_DIR}/lib/libtorch/)
message(STATUS "Torch libraries: ${TORCH_LIBRARIES}")

set(RPATH ${CMAKE_CURRENT_SOURCE_DIR}/lib)
list(APPEND CMAKE_BUILD_RPATH ${RPATH})
message(STATUS "libomp found: ${RPATH}")

find_package(Threads REQUIRED)

include_directories(${TORCH_INCLUDE_DIRS})
link_directories(${TORCH_LIBRARY_DIRS})

add_executable(main main.cpp)

target_include_directories(main PRIVATE ./lib)
target_link_libraries(main "${TORCH_LIBRARIES}" "${CMAKE_THREAD_LIBS_INIT}")
target_compile_features(main PUBLIC cxx_std_17) # Ensures C++17

if(TORCH_CUDA_VERSION)
  message(STATUS "CUDA Version: ${TORCH_CUDA_VERSION}")
  add_definitions(-DUSE_CUDA)
endif()
```

A couple of things are worth noting. Personally, I like to bring all my libraries physically into my project, which is why we initially downloaded LibTorch into the lib directory in our project. That means I need to tell CMake where to find this library: `find_package(Torch REQUIRED PATHS ${CMAKE_CURRENT_SOURCE_DIR}/lib/libtorch/)`

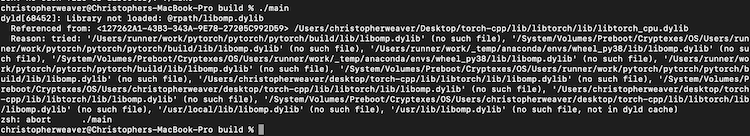

Secondly, I ran into an issue with the LibTorch library running on my Mac. The program kept complaining that it could not find the libomp library and would crash at runtime.

I am not entirely sure what is up with that, but I knew I had that library installed through Homebrew on my Mac, so I just made a copy of libomp.dylib and added it to my lib directory. I then add that directory to the build RPATH in CMake so that the library is found at runtime. Problem solved. Also, I know I will not have CUDA on my machine, but I decided to put some extra checking into my CMakeLists.txt in case it is helpful for someone else.
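The CUDA check in the CMake file defines a `USE_CUDA` macro, but the C++ code later in this post relies on a runtime check instead. As a rough sketch of how that macro could be consumed at compile time, here is a small helper. The function name `build_flavor` is hypothetical and not part of LibTorch.

```cpp
#include <string>

// Reports which code path this binary was compiled for.
// USE_CUDA is the macro that add_definitions(-DUSE_CUDA) would define;
// build_flavor is a hypothetical helper made up for this illustration.
inline std::string build_flavor() {
#ifdef USE_CUDA
    return "CUDA";
#else
    return "CPU";
#endif
}
```

Printing `build_flavor()` at startup is one simple way to confirm which configuration CMake actually produced.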

Now let's quickly add some code to our main.cpp file to ensure we are ready to start building. I initialize a simple Tensor to make sure we play nice with LibTorch.

C++ 1#include <iostream>

2#include <torch/torch.h>

3

4int main() {

5

6 torch::Tensor foo = torch::rand({12, 12});

7

8 std::cout << "We are ready to begin!" << std::endl;

9

10 return 0;

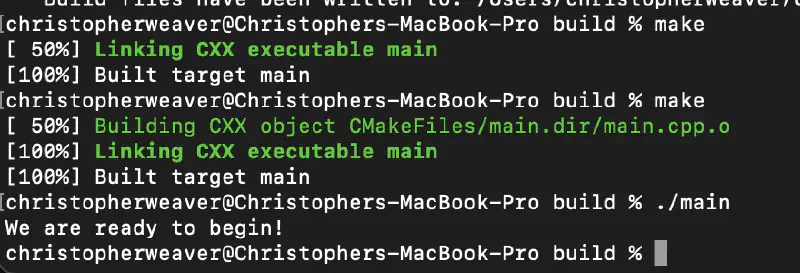

11}In my terminal I next run

```bash
cd build
cmake ..
make
./main
```

If everything is set up correctly, you should have an executable called main compiled in your build directory. When we run it with ./main, we should see “We are ready to begin!” printed to the terminal!

Getting our dataset

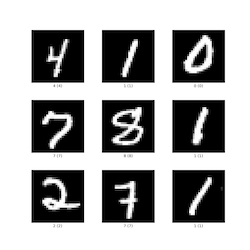

We will be training our model to recognize images of handwritten digits from 0 to 9. This is the MNIST dataset.

We actually need four different ubyte files holding the train images, test images, train labels, and test labels. In my project I added a new directory called mnist_data where these files will live. I then added this Python script to that directory to download those files. The site these files are served from is notorious for returning 503 errors; if you get one, keep running the script and it will eventually work.

```python
import requests
import os

def download_file(url, filename):
    """ Helper function to download a file from a URL to the specified filename """
    response = requests.get(url)
    response.raise_for_status()  # Check that the request was successful

    with open(filename, 'wb') as f:
        f.write(response.content)
    print(f"Downloaded {filename}")

def download_mnist_ubyte():
    """ Download the MNIST dataset in UBYTE format """
    base_url = "http://yann.lecun.com/exdb/mnist/"
    files = [
        "train-images-idx3-ubyte.gz",
        "train-labels-idx1-ubyte.gz",
        "t10k-images-idx3-ubyte.gz",
        "t10k-labels-idx1-ubyte.gz"
    ]

    for file in files:
        url = f"{base_url}{file}"
        download_file(url, file)

# Run the function to download the dataset
download_mnist_ubyte()
```

Run from inside mnist_data, this script downloads the files into that directory. The last thing you need to do is extract the four .gz files, and we are good to go.
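If loading fails later, a common cause is a file that was never decompressed. The following is a minimal sketch of a sanity check, assuming the standard IDX layout used by the MNIST image files: a big-endian 32-bit magic number (2051 for images) followed by the image count, rows, and columns. The names `IdxImageHeader`, `read_be_u32`, and `parse_idx_image_header` are hypothetical helpers, not part of LibTorch.

```cpp
#include <cstdint>
#include <stdexcept>
#include <vector>

// Fields stored in the first 16 bytes of an IDX image file.
struct IdxImageHeader {
    std::uint32_t magic;
    std::uint32_t count;
    std::uint32_t rows;
    std::uint32_t cols;
};

// Reads a 32-bit unsigned integer stored in big-endian byte order.
inline std::uint32_t read_be_u32(const unsigned char* p) {
    return (static_cast<std::uint32_t>(p[0]) << 24) |
           (static_cast<std::uint32_t>(p[1]) << 16) |
           (static_cast<std::uint32_t>(p[2]) << 8) |
           static_cast<std::uint32_t>(p[3]);
}

// Parses the header from the first bytes of a file and rejects anything
// that does not start with the image magic number 2051 (0x00000803).
inline IdxImageHeader parse_idx_image_header(const std::vector<unsigned char>& bytes) {
    if (bytes.size() < 16) {
        throw std::runtime_error("header too short");
    }
    IdxImageHeader h{read_be_u32(&bytes[0]), read_be_u32(&bytes[4]),
                     read_be_u32(&bytes[8]), read_be_u32(&bytes[12])};
    if (h.magic != 2051) {
        throw std::runtime_error("not an uncompressed IDX image file");
    }
    return h;
}
```

Feeding it the first 16 bytes of train-images-idx3-ubyte should report 60000 images of 28 by 28 pixels, while a file that is still gzipped would be rejected because its first bytes are different.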

Let's get to the C++

We are now ready to start writing our C++ code to set up our model architecture, load our data, and train the model to classify these images. First we are going to build out our model.

```cpp
// Define a new Module. Inherit from the torch nn module
struct Dense_Net : torch::nn::Module {

  Dense_Net() {
    // Construct and register three Linear submodules.
    fc1 = register_module("fc1", torch::nn::Linear(784, 350));
    fc2 = register_module("fc2", torch::nn::Linear(350, 75));
    fc3 = register_module("fc3", torch::nn::Linear(75, 10));
  }

  // Implement the Net's algorithm.
  torch::Tensor forward(torch::Tensor x) {
    // Flatten each 28x28 image into a vector of 784 values
    x = torch::relu(fc1->forward(x.reshape({x.size(0), 784})));
    x = torch::dropout(x, /*p=*/0.5, /*train=*/is_training());
    x = torch::relu(fc2->forward(x));
    x = torch::log_softmax(fc3->forward(x), /*dim=*/1);
    return x;
  }

  // Use one of many "standard library" modules.
  torch::nn::Linear fc1{nullptr}, fc2{nullptr}, fc3{nullptr};
};
```

So what we are doing here is defining our model as a C++ struct that inherits from torch::nn::Module. If we use a class instead, torch complains that the parameters member function we need to access later is private. That is because a class inherits privately by default, so a class works too as long as the base is declared public (class Dense_Net : public torch::nn::Module). Our model is composed of three linear layers of type torch::nn::Linear. When initializing our struct, we give each layer the size of the input coming into it and the size of the output coming from it, and we register each layer with LibTorch through register_module. Finally we need to define our forward function. The forward function takes a torch::Tensor and repeatedly reassigns it as the function executes. We call forward on each layer of our model, passing in the tensor, and apply the relu activation function to the result of the first two layers, with dropout after the first one during training. On the final layer, we apply the log_softmax function to obtain the model's classification prediction.
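The struct versus class behavior is plain C++ and has nothing to do with LibTorch itself. Here is a minimal sketch that shows it with a stand-in base type. `Module`, `StructNet`, `PrivateNet`, and `PublicNet` are hypothetical names used only for this illustration.

```cpp
#include <type_traits>

// Simplified stand-in for torch::nn::Module (hypothetical).
struct Module {
    int parameters() const { return 3; }
};

struct StructNet : Module {};        // struct: the base is public by default
class PrivateNet : Module {};        // class: the base is private by default
class PublicNet : public Module {};  // class with an explicitly public base

// Outside code can only treat a type as a Module when the base is public.
static_assert(std::is_convertible<StructNet*, Module*>::value,
              "struct inheritance exposes the base");
static_assert(!std::is_convertible<PrivateNet*, Module*>::value,
              "default class inheritance hides the base");
static_assert(std::is_convertible<PublicNet*, Module*>::value,
              "public class inheritance exposes the base");
```

With `PrivateNet`, a call such as `net.parameters()` from outside the class would not compile, which mirrors the complaint described above.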

Next we will move to our main() function and work on loading up our dataset for training. Luckily for us, the LibTorch library has some specialized features for working with the MNIST dataset specifically.

```cpp
int main() {

  auto dataset = torch::data::datasets::MNIST("../mnist_data")
    .map(torch::data::transforms::Normalize<>(0.1307, 0.3081))
    .map(torch::data::transforms::Stack<>());

  auto data_loader = torch::data::make_data_loader(
    std::move(dataset),
    torch::data::DataLoaderOptions().batch_size(64));

}
```

The first thing we are doing is loading our dataset. We tell torch where to find the MNIST dataset, then to normalize each image with the given mean and standard deviation, and finally to stack the individual examples of a batch into a single tensor. There are many more things we can do to pre-process our dataset prior to training, but this is all we really need for this use case.
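To make the Normalize step concrete, here is a small sketch of the arithmetic it applies to one pixel. It assumes raw pixel bytes are first scaled to the range 0 to 1 before the mean of 0.1307 is subtracted and the result is divided by the standard deviation of 0.3081. The helper `normalize_pixel` and the constant names are hypothetical.

```cpp
#include <cstdint>

// Mean and standard deviation passed to Normalize in the post.
constexpr float kMnistMean = 0.1307f;
constexpr float kMnistStd = 0.3081f;

// Scales a raw 0..255 pixel to 0..1 (an assumption about the loader),
// then applies (value - mean) / std.
inline float normalize_pixel(std::uint8_t raw) {
    const float scaled = static_cast<float>(raw) / 255.0f;
    return (scaled - kMnistMean) / kMnistStd;
}
```

Under those assumptions a black pixel maps to roughly -0.42 and a white pixel to roughly 2.82, so the inputs end up centered near zero.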

Next we need to create a data loader that will split our data into mini-batches of 64 examples per training iteration. This will help speed up training and help the model generalize what it is learning. Once our data_loader is ready, we can begin training. This code, like the snippets that follow, goes inside main():
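As a quick back-of-the-envelope check on what a batch size of 64 means, the sketch below counts the iterations in one epoch. It assumes the 60,000 images of the MNIST training set and that the final, smaller batch is kept rather than dropped. Both helper names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Number of mini-batches needed to cover every example once.
inline std::size_t batches_per_epoch(std::size_t examples, std::size_t batch_size) {
    assert(batch_size > 0);
    return (examples + batch_size - 1) / batch_size;  // ceiling division
}

// Size of the final mini-batch, which may be smaller than the rest.
inline std::size_t last_batch_size(std::size_t examples, std::size_t batch_size) {
    assert(batch_size > 0);
    const std::size_t remainder = examples % batch_size;
    return remainder == 0 ? batch_size : remainder;
}
```

For 60,000 examples and a batch size of 64 this gives 938 batches per epoch, with 32 examples in the last one.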

C++ 1 // Initialize our model

2 auto net = std::make_shared<Dense_Net>();

3

4 // Set up an optimizer.

5 torch::optim::Adam optimizer(net->parameters(), /*lr=*/0.01);

6

7 // Iterate over the amount of epochs you want to train your data for

8 for (size_t epoch = 0; epoch != 10; ++epoch) {

9

10 size_t batch_index = 0;

11

12 // Iterate the data loader to yield batches from the dataset.

13 for (auto& batch : *data_loader) {

14

15 // Reset gradients.

16 optimizer.zero_grad();

17

18 // Execute the model on the input data.

19 torch::Tensor prediction = net->forward(batch.data);

20

21 // Compute a loss value to judge the prediction of our model.

22 torch::Tensor loss = torch::nll_loss(prediction, batch.target);

23

24 // Compute gradients of the loss w.r.t. the parameters of our model.

25 loss.backward();

26

27 // Update the parameters based on the calculated gradients.

28 optimizer.step();

29

30 // Output the loss every 100 batches.

31 if (++batch_index % 100 == 0) {

32 std::cout << "Epoch: " << epoch << " | Batch: " << batch_index

33 << " | Loss: " << loss.item<float>() << std::endl;

34 }

35 }

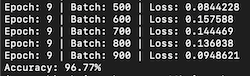

36 }There’s a lot to unpack here, but for those familiar with using PyTorch in Python, this will all seem quite familiar. The comments provided should help clarify things. As we iterate over the data loader, we receive a mini-batch of 64 images from the MNIST dataset. The neural network executes this mini-batch, and then backpropagation is performed as part of the learning process. We log progress to the terminal after every 100 mini-batches processed.
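The loss in this loop pairs log_softmax in the model with nll_loss in the training code. The sketch below spells out that arithmetic for a single example in plain C++, as an illustration rather than LibTorch's implementation. The function names mirror the torch ones but are standalone helpers, and the batch averaging that the loss applies across examples is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Converts raw scores into log-probabilities. Subtracting the maximum
// score first keeps the exponentials from overflowing.
inline std::vector<double> log_softmax(const std::vector<double>& logits) {
    assert(!logits.empty());
    const double max_logit = *std::max_element(logits.begin(), logits.end());
    double sum = 0.0;
    for (double v : logits) {
        sum += std::exp(v - max_logit);
    }
    const double log_sum = max_logit + std::log(sum);
    std::vector<double> out;
    out.reserve(logits.size());
    for (double v : logits) {
        out.push_back(v - log_sum);
    }
    return out;
}

// Negative log-likelihood of the correct class for one example.
inline double nll_loss(const std::vector<double>& log_probs, std::size_t target) {
    assert(target < log_probs.size());
    return -log_probs[target];
}
```

The loss is small when the model assigns a high probability to the correct digit and grows as that probability shrinks, which is the signal backpropagation uses to adjust the weights.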

Once training is complete, we assess the effectiveness of our model by testing it against the test dataset, which it has not yet seen. This is an excellent opportunity to determine if our model has truly learned to generalize digit recognition or has merely learned to classify the specific images from the training dataset.

Just like before, we load up a dataset and data loader, although this time for our test dataset:

```cpp
// Create the test dataset
auto test_dataset = torch::data::datasets::MNIST("../mnist_data", torch::data::datasets::MNIST::Mode::kTest)
  .map(torch::data::transforms::Normalize<>(0.1307, 0.3081)) // Normalize data
  .map(torch::data::transforms::Stack<>()); // Stack data into a single tensor

// Create a data loader for the test dataset
auto test_loader = torch::data::make_data_loader(
  std::move(test_dataset),
  torch::data::DataLoaderOptions());
```

This should look nearly identical to when we loaded our train dataset except that we pass the parameter torch::data::datasets::MNIST::Mode::kTest which tells torch to load up the correct one.

Next we set our model to eval() mode and iterate over the test dataset, evaluating the accuracy of its output against the test labels.

```cpp
net->eval();

int correct = 0; // Count of correct predictions
int total = 0; // Total number of samples processed

// Iterate over the test dataset
for (auto& batch : *test_loader) {
  auto data = batch.data; // Features (input images)
  auto targets = batch.target.squeeze(); // Targets (true labels)

  // Forward pass to get the output from the model
  auto output = net->forward(data);

  // Get the predictions by finding the index of the max log-probability
  auto pred = output.argmax(1);

  // Compare predictions with true labels
  correct += pred.eq(targets).sum().item<int64_t>();
  total += data.size(0); // Increment total by the batch size
}

// Calculate accuracy
double accuracy = static_cast<double>(correct) / total;
std::cout << "Accuracy: " << accuracy * 100.0 << "%" << std::endl;
```

I was able to get an accuracy of 96% after 10 epochs of training on this model, which is not bad considering it's a simple dense neural network.
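The evaluation loop boils down to two steps: take the index of the largest log-probability as the predicted digit, then count how often it matches the label. Here is a plain C++ sketch of that logic on ordinary vectors. The helpers `argmax` and `accuracy` are hypothetical stand-ins for the tensor operations above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Index of the largest score, used as the predicted class.
inline std::size_t argmax(const std::vector<double>& scores) {
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return best;
}

// Fraction of examples whose predicted class matches the true label.
inline double accuracy(const std::vector<std::vector<double>>& outputs,
                       const std::vector<std::size_t>& labels) {
    assert(!outputs.empty() && outputs.size() == labels.size());
    std::size_t correct = 0;
    for (std::size_t i = 0; i < outputs.size(); ++i) {
        if (argmax(outputs[i]) == labels[i]) {
            ++correct;
        }
    }
    return static_cast<double>(correct) / static_cast<double>(outputs.size());
}
```

Because log is monotonic, taking the argmax of log-probabilities picks the same class as taking the argmax of the probabilities themselves.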

There are better results to be had, though, if we instead use a convolutional neural network, which specializes in vision tasks. We can easily cook one up:

```cpp
struct Conv_Net : torch::nn::Module {
 public:
  Conv_Net()
    : conv1(torch::nn::Conv2dOptions(1, 16, 3)), // input channels, output channels, kernel size=3
      conv2(torch::nn::Conv2dOptions(16, 32, 3)),
      fc1(800, 128), // 32 channels * 5 * 5 positions = 800 input features
      fc2(128, 10)
  {
    register_module("conv1", conv1);
    register_module("conv2", conv2);
    register_module("fc1", fc1);
    register_module("fc2", fc2);
  }

  torch::Tensor forward(torch::Tensor x) {
    x = torch::relu(torch::max_pool2d(conv1->forward(x), 2)); // pool kernel size=2
    x = torch::relu(torch::max_pool2d(conv2->forward(x), 2)); // pool kernel size=2
    x = x.view({-1, 800}); // Flatten to 800 features for fc1
    x = torch::relu(fc1->forward(x));
    x = fc2->forward(x);
    return torch::log_softmax(x, 1);
  }

 private:
  torch::nn::Conv2d conv1, conv2;
  torch::nn::Linear fc1, fc2;
};
```

My convolutional model has two convolutional layers, two max-pool layers, and two dense layers that reduce the output down to the 10 final values we can apply a softmax to. We also need to flatten the tensor coming out of the last max-pool layer into one flat vector per image, which is the shape our dense layers accept. Training this for 5 epochs got us up to 98.6%.
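The 800 input features of fc1 follow from the image size and the layer settings. The sketch below walks through that arithmetic, assuming 28 by 28 inputs, 3 by 3 convolutions with stride 1 and no padding, and 2 by 2 pooling that rounds down. The helper names are hypothetical.

```cpp
#include <cstddef>

// Side length after a convolution with stride 1 and no padding.
constexpr std::size_t conv_out(std::size_t in, std::size_t kernel) {
    return in - kernel + 1;
}

// Side length after non-overlapping pooling, rounding down.
constexpr std::size_t pool_out(std::size_t in, std::size_t window) {
    return in / window;
}

// Side length after conv(3) -> pool(2) -> conv(3) -> pool(2).
constexpr std::size_t side_after_two_blocks(std::size_t side) {
    return pool_out(conv_out(pool_out(conv_out(side, 3), 2), 3), 2);
}

// Number of values per image once the feature maps are flattened.
constexpr std::size_t flattened_features(std::size_t side, std::size_t channels) {
    return channels * side_after_two_blocks(side) * side_after_two_blocks(side);
}

// 28 -> 26 -> 13 -> 11 -> 5, and 32 channels * 5 * 5 = 800.
static_assert(flattened_features(28, 32) == 800, "matches the 800 inputs of fc1");
```

Changing the kernel size, the number of output channels, or the input resolution changes this number, so fc1 and the view call would need to be updated together.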

Alright, now that our model is performing well, we probably want to save it for later use:

```cpp
torch::save(net, "net.pt");
```

And finally, loading up the model:

```cpp
torch::load(net, "net.pt");
```

Unlocking the power of the GPU

Modern deep learning is powered by GPUs and other hardware specialized for the linear algebra these models execute en masse. Luckily, the LibTorch C++ library supports training our models on CUDA-powered GPUs right out of the box. This of course won't work on my MacBook, which does not have an NVIDIA GPU with CUDA, but I was able to test and confirm that what I have below works on my Linux machine, which does have the hardware for this. Assuming you have the correct version of CUDA for the LibTorch library you installed (I am using CUDA 12.4 with LibTorch 2.3.0), all we need to do is change a few lines in our C++ file. We initialize a torch::Device object that defaults to the CPU, then check whether CUDA is available on our machine. If that check passes, we switch the device to the GPU:

```cpp
torch::Device device(torch::kCPU);
if (torch::cuda::is_available()) {
  device = torch::Device(torch::kCUDA);
}
```

Now, all we need to do is ensure our model and the data it will be interacting with are properly moved to this device (hopefully our GPU). Three lines need to reflect this change:

```cpp
net->to(device);
auto data = batch.data.to(device);
auto targets = batch.target.to(device);
```

That is it. With this change our model should successfully train on our GPU. The good news is that even if we do not have a compatible GPU, this code will still work fine since we default to the CPU. Our updated code will look like this:

```cpp
// Set up our device contingent upon the machine we are training on
torch::Device device(torch::kCPU);
if (torch::cuda::is_available()) {
  device = torch::Device(torch::kCUDA);
}

// Initialize our model
auto net = std::make_shared<Dense_Net>();
net->to(device);

// Set up an optimizer.
torch::optim::Adam optimizer(net->parameters(), /*lr=*/0.01);

// Iterate over the amount of epochs you want to train your data for
for (size_t epoch = 0; epoch != 10; ++epoch) {

  size_t batch_index = 0;

  // Iterate the data loader to yield batches from the dataset.
  for (auto& batch : *data_loader) {

    // Ensure our data is on the GPU or CPU depending on our machine
    auto data = batch.data.to(device);
    auto targets = batch.target.to(device);

    // Reset gradients.
    optimizer.zero_grad();

    // Execute the model on the input data.
    torch::Tensor prediction = net->forward(data);

    // Compute a loss value to judge the prediction of our model.
    torch::Tensor loss = torch::nll_loss(prediction, targets);

    // Compute gradients of the loss w.r.t. the parameters of our model.
    loss.backward();

    // Update the parameters based on the calculated gradients.
    optimizer.step();

    // Output the loss every 100 batches.
    if (++batch_index % 100 == 0) {
      std::cout << "Epoch: " << epoch << " | Batch: " << batch_index
                << " | Loss: " << loss.item<float>() << std::endl;
    }
  }
}
```

If you do get this to train on your GPU, you should see a significant speedup in training time (especially for the convolutional neural network).
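To put a number on that speedup, one option is to time an epoch on each device and compare. The helper below is a minimal sketch using only the standard library. `seconds_elapsed` is a hypothetical name, and it measures wall-clock time around whatever callable it is given.

```cpp
#include <chrono>
#include <utility>

// Runs the given callable once and returns the wall-clock time it took,
// in seconds. steady_clock is used because it never jumps backwards.
template <typename Fn>
double seconds_elapsed(Fn&& work) {
    const auto start = std::chrono::steady_clock::now();
    std::forward<Fn>(work)();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}
```

Wrapping the body of the epoch loop in a lambda and passing it to this helper would give one timing per epoch. Timing whole epochs rather than single batches is the safer choice here, since GPU work can be queued asynchronously and very short measurements may be misleading.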

Please do heed my warning that getting CUDA to work on your machine for deep learning is not for the faint of heart. There is no simple process to get it up and running, even when you do have the correct hardware. Before writing this post, I was running CUDA 10 on my Linux machine, which was not compatible with LibTorch, and it took me a good couple of hours to struggle my way through removing CUDA 10 and then installing CUDA 12.

Conclusion

There are thousands of tutorials available that teach how to train a neural network to accurately classify the MNIST dataset using Python. This popularity is well-founded, as Python offers an impressive array of tools for this purpose. However, there’s an emerging need for edge devices that can harness the power of these neural networks. Using C++ for such applications provides the efficiency and low memory footprint that these devices critically require. As we move forward, it’s likely that developers will increasingly turn to C++ to meet the stringent performance demands of edge computing environments.